The Cairo Book

By the Cairo Community and its contributors. Special thanks to StarkWare through OnlyDust, and Voyager for supporting the creation of this book.

This version of the text assumes you’re using Cairo version 2.11.4 and Starknet Foundry version 0.39.0. See the Installation section of Chapter 1 to install or update Cairo and Starknet Foundry.

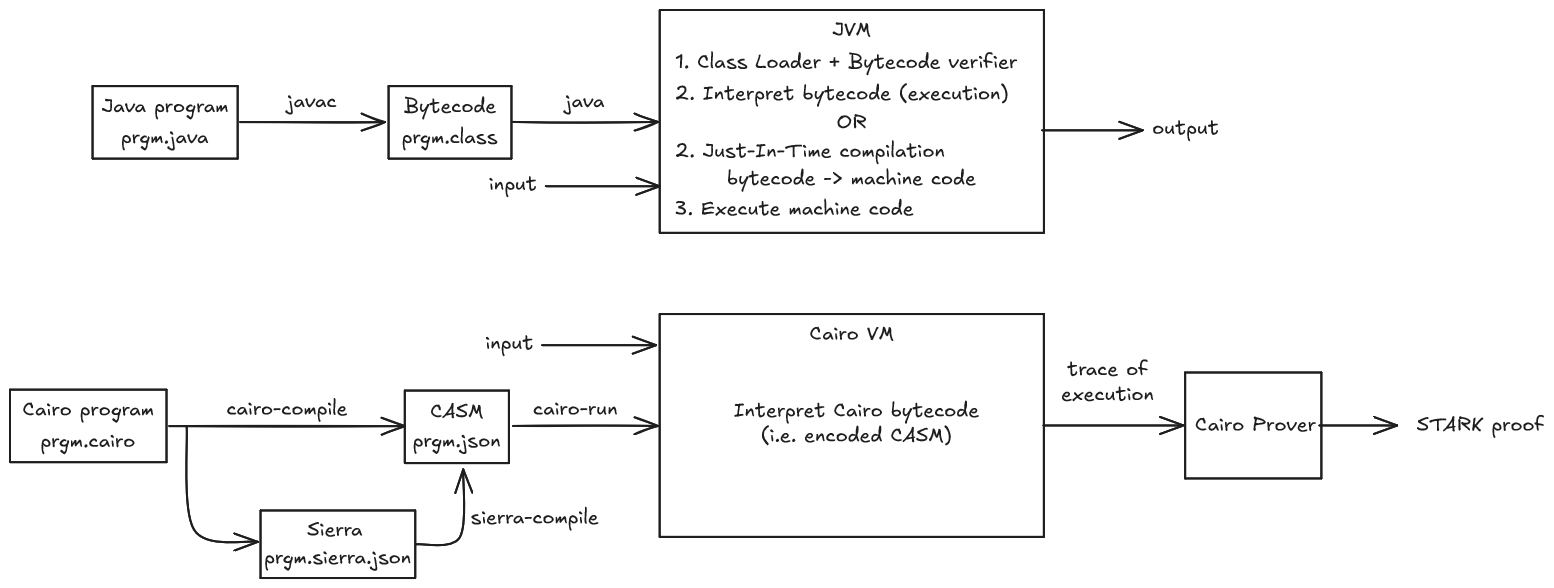

While reading this book, if you want to experiment with Cairo code and see how it compiles into Sierra (Intermediate Representation) and CASM (Cairo Assembly), you can use the cairovm.codes playground.

This book is open source. Find a typo or want to contribute? Check out the book's GitHub repository.

Foreword

Zero-knowledge proofs have emerged as a transformative technology in the blockchain space, offering solutions for both privacy and scalability challenges. Among these, STARKs (Scalable Transparent ARguments of Knowledge) stand out as a particularly powerful innovation. Unlike traditional proof systems, STARKs rely solely on collision-resistant hash functions, making them post-quantum secure and eliminating the need for trusted setups.

However, writing general-purpose programs that can generate cryptographic proofs has historically been a significant challenge. Developers needed deep expertise in cryptography and complex mathematical concepts to create verifiable computations, making it impractical for mainstream adoption.

This is where Cairo comes in. As a general-purpose programming language designed specifically for creating provable programs, Cairo abstracts away the underlying cryptographic complexities while maintaining the full power of STARKs. Strongly inspired by Rust, Cairo has been built to help you create provable programs without requiring specific knowledge of its underlying architecture, allowing you to focus on the program logic itself.

Blockchain developers who want to deploy contracts on Starknet will use the Cairo programming language to code their smart contracts. This allows the Starknet OS to generate execution traces for transactions, which a prover uses to produce a proof that is then verified on Ethereum L1 before the state root of Starknet is updated.

However, Cairo is not only for blockchain developers. As a general-purpose programming language, it can be used for any computation that would benefit from being proved on one computer and verified on other machines. Powered by a Rust VM and a next-generation prover, the execution and proof generation of Cairo programs are blazingly fast, making Cairo the best tool for building provable applications.

This book is designed for developers with a basic understanding of programming concepts. It is a friendly and approachable text intended to help you level up your knowledge of Cairo, but also help you develop your programming skills in general. So, dive in and get ready to learn all there is to know about Cairo!

Acknowledgements

This book would not have been possible without the help of the Cairo community. We would like to thank every contributor for their contributions to this book!

We would like to thank the Rust community for the Rust Book, which has been a great source of inspiration for this book. Many examples and explanations have been adapted from the Rust Book to fit the Cairo programming language, as the two languages share many similarities.

Introduction

What is Cairo?

Cairo is a programming language designed to leverage the power of mathematical proofs for computational integrity. Just as C.S. Lewis defined integrity as "doing the right thing, even when no one is watching," Cairo enables programs to prove they've done the right computation, even when executed on untrusted machines.

The language is built on STARK technology, a modern evolution of PCP (Probabilistically Checkable Proofs) that transforms computational claims into constraint systems. Cairo's ultimate purpose is to generate these mathematical proofs, which can be verified efficiently and with absolute certainty.

What Can You Do with It?

Cairo enables a paradigm shift in how we think about trusted computation. Its primary application today is Starknet, a Layer 2 scaling solution for Ethereum that addresses one of blockchain's fundamental challenges: scalability without sacrificing security.

In the traditional blockchain model, every participant must verify every computation. Starknet changes this by using Cairo's proof system: computations are executed off-chain by a prover who generates a STARK proof, which is then verified by an Ethereum smart contract. This verification requires significantly less computational power than re-executing the computations, enabling massive scalability while maintaining security.

However, Cairo's potential extends beyond blockchain. Any scenario where computational integrity needs to be verified efficiently can benefit from Cairo's verifiable computation capabilities.

Who Is This Book For?

This book caters to three main audiences, each with their own learning path:

- General-Purpose Developers: If you're interested in Cairo for its verifiable computation capabilities outside of blockchain, you'll want to focus on chapters 1-12. These chapters cover the core language features and programming concepts without diving deep into smart contract specifics.

- New Smart Contract Developers: If you're new to both Cairo and smart contracts, we recommend reading the book front to back. This will give you a solid foundation in both the language fundamentals and smart contract development principles.

- Experienced Smart Contract Developers: If you're already familiar with smart contract development in other languages, or Rust, you might want to follow this focused path:

  - Chapters 1-3 for Cairo basics

  - Chapter 8 for Cairo's trait and generics system

  - Skip to Chapter 15 for smart contract development

  - Reference other chapters as needed

Regardless of your background, this book assumes basic programming knowledge such as variables, functions, and common data structures. While prior experience with Rust can be helpful (as Cairo shares many similarities), it's not required.


Getting Started

Let’s start your Cairo journey! There’s a lot to learn, but every journey starts somewhere. In this chapter, we’ll discuss:

- Installing Scarb, which is Cairo's build toolchain and package manager, on Linux, macOS, and Windows.

- Installing Starknet Foundry, which is the default test runner when creating a Cairo project.

- Writing a program that prints Hello, world!.

- Using basic Scarb commands to create a project and execute a program.

Getting Help

If you have any questions about Starknet or Cairo, you can ask them in the Starknet Discord server. The community is friendly and always willing to help.

Interacting with the Starknet AI Agent

Starknet offers its own AI agent designed to assist with Cairo and Starknet-related questions. This AI agent is trained on the Cairo book and the Starknet documentation, using Retrieval-Augmented Generation (RAG) to efficiently retrieve information and provide accurate assistance.

You can find the Starknet Agent on the Starknet Agent website.

Installation

The first step is to install Cairo. We'll download Cairo through starkup, a command line tool for managing Cairo versions and associated tools. You'll need an internet connection for the download.

The following steps install the latest stable version of the Cairo compiler through a binary called Scarb. Scarb bundles the Cairo compiler and the Cairo language server together in an easy-to-install package so that you can start writing Cairo code right away.

Scarb is also Cairo's package manager and is heavily inspired by Cargo, Rust's build system and package manager.

Scarb handles a lot of tasks for you, such as building your code (either pure Cairo or Starknet contracts), downloading the libraries your code depends on, building those libraries, and providing LSP support for the VSCode Cairo 1 extension.

As you write more complex Cairo programs, you might add dependencies, and if you start a project using Scarb, managing external code and dependencies will be a lot easier to do.

Starknet Foundry is a toolchain for Cairo programs and Starknet smart contract development. It supports many features, including writing and running tests with advanced features, deploying contracts, interacting with the Starknet network, and more.

Let's start by installing starkup, which will help us manage Cairo, Scarb, and Starknet Foundry.

Installing starkup on Linux or macOS

If you're using Linux or macOS, open a terminal and enter the following command:

curl --proto '=https' --tlsv1.2 -sSf https://sh.starkup.dev | sh

The command downloads a script and starts the installation of the starkup tool, which installs the latest stable version of Cairo and related tooling. You might be prompted for your password. If the install is successful, the following line will appear:

starkup: Installation complete.

After installation, starkup will automatically install the latest stable versions of Cairo, Scarb, and Starknet Foundry. You can verify the installations by running the following commands in a new terminal session:

$ scarb --version

scarb 2.11.4 (c0ef5ec6a 2025-04-09)

cairo: 2.11.4 (https://crates.io/crates/cairo-lang-compiler/2.11.4)

sierra: 1.7.0

$ snforge --version

snforge 0.39.0

We'll describe Starknet Foundry in more detail in Chapter 10 for Cairo programs testing and in Chapter 18 when discussing Starknet smart contract testing and security in the second part of the book.

Installing the VSCode Extension

Cairo has a VSCode extension that provides syntax highlighting, code completion, and other useful features. You can install it from the VSCode Marketplace.

Once installed, go into the extension settings, and make sure to tick the Enable Language Server and Enable Scarb options.

Hello, World

Now that you’ve installed Cairo through Scarb, it’s time to write your first Cairo program.

It’s traditional when learning a new language to write a little program that prints the text Hello, world! to the screen, so we’ll do the same here!

Note: This book assumes basic familiarity with the command line. Cairo makes no specific demands about your editing or tooling or where your code lives, so if you prefer to use an integrated development environment (IDE) instead of the command line, feel free to use your favorite IDE. The Cairo team has developed a VSCode extension for the Cairo language that you can use to get the features from the language server and code highlighting. See Appendix F for more details.

Creating a Project Directory

You’ll start by making a directory to store your Cairo code. It doesn’t matter to Cairo where your code lives, but for the exercises and projects in this book, we suggest making a cairo_projects directory in your home directory and keeping all your projects there.

Open a terminal and enter the following commands to make a cairo_projects directory.

For Linux, macOS, and PowerShell on Windows, enter this:

mkdir ~/cairo_projects

cd ~/cairo_projects

For Windows CMD, enter this:

> mkdir "%USERPROFILE%\cairo_projects"

> cd /d "%USERPROFILE%\cairo_projects"

Note: From now on, for each example shown in the book, we assume that you will be working from a Scarb project directory. If you are not using Scarb, and try to run the examples from a different directory, you might need to adjust the commands accordingly or create a Scarb project.

Creating a Project with Scarb

Let’s create a new project using Scarb.

Navigate to your cairo_projects directory (or wherever you decided to store your code). Then run the following:

scarb new hello_world

Scarb will ask which test runner you want to set up. You will be given two options:

? Which test runner do you want to set up? ›

❯ Starknet Foundry (default)

Cairo Test

In general, we'll prefer using the first one ❯ Starknet Foundry (default).

This creates a new directory and project called hello_world. We’ve named our project hello_world, and Scarb creates its files in a directory of the same name.

Go into the hello_world directory with the command cd hello_world. You’ll see that Scarb has generated three files and two directories for us: a Scarb.toml file, a src directory with a lib.cairo file inside, and a tests directory containing a test_contract.cairo file. For now, we can remove this tests directory.

It has also initialized a new Git repository along with a .gitignore file.

Note: Git is a common version control system. You can opt out of using a version control system by passing the --no-vcs flag. Run scarb new --help to see the available options.

Open Scarb.toml in your text editor of choice. It should look similar to the code in Listing 1-1.

Filename: Scarb.toml

[package]

name = "hello_world"

version = "0.1.0"

edition = "2024_07"

# See more keys and their definitions at https://docs.swmansion.com/scarb/docs/reference/manifest.html

[dependencies]

starknet = "2.11.4"

[dev-dependencies]

snforge_std = "0.39.0"

assert_macros = "2.11.4"

[[target.starknet-contract]]

sierra = true

[scripts]

test = "snforge test"

# ...

Listing 1-1: Contents of Scarb.toml generated by scarb new

This file is in the TOML (Tom’s Obvious, Minimal Language) format, which is Scarb’s configuration format.

The first line, [package], is a section heading that indicates that the following statements are configuring a package. As we add more information to this file, we’ll add other sections.

The next three lines set the configuration information Scarb needs to compile your program: the name of the package, the version of the package, and the edition of the prelude to use. The prelude is the collection of the most commonly used items that are automatically imported into every Cairo program. You can learn more about the prelude in Appendix D.

The [dependencies] section is the start of a section for you to list any of your project’s dependencies. In Cairo, packages of code are referred to as crates. We won’t need any other crates for this project.

The [dev-dependencies] section is about dependencies that are required for development, but are not needed for the actual production build of the project. snforge_std and assert_macros are two examples of such dependencies. If you want to test your project without using Starknet Foundry, you can use cairo_test.

The [[target.starknet-contract]] section allows you to build Starknet smart contracts. We can remove it for now.

The [scripts] section allows you to define custom scripts. By default, there is one script that runs tests with snforge when you use the scarb test command. We can also remove it for now.

Starknet Foundry also has more options; check out the Starknet Foundry documentation for more information.

By default, using Starknet Foundry adds the starknet dependency and the [[target.starknet-contract]] section, so that you can build contracts for Starknet out of the box. We will start with only Cairo programs, so you can edit your Scarb.toml file to the following:

Filename: Scarb.toml

[package]

name = "hello_world"

version = "0.1.0"

edition = "2024_07"

[dependencies]

Listing 1-2: Contents of modified Scarb.toml

The other file created by Scarb is src/lib.cairo. Let's delete all of its content and put in the following line; we will explain the reason later.

mod hello_world;

Then create a new file called src/hello_world.cairo and put the following code in it:

Filename: src/hello_world.cairo

fn main() {
    println!("Hello, World!");
}

We have just created a file called lib.cairo, which contains a module declaration referencing another module named hello_world, as well as the file hello_world.cairo, containing the implementation details of the hello_world module.

Scarb requires your source files to be located within the src directory.

The top-level project directory is reserved for README files, license information, configuration files, and any other non-code-related content. Scarb ensures a designated location for all project components, maintaining a structured organization.

If you started a project that doesn’t use Scarb, you can convert it to a project that does use Scarb. Move the project code into the src directory and create an appropriate Scarb.toml file. You can also use the scarb init command to generate the src folder and the Scarb.toml file.

├── Scarb.toml

├── src

│ ├── lib.cairo

│ └── hello_world.cairo

A sample Scarb project structure

Building a Scarb Project

From your hello_world directory, build your project by entering the following command:

$ scarb build

Compiling hello_world v0.1.0 (listings/ch01-getting-started/no_listing_01_hello_world/Scarb.toml)

Finished `dev` profile target(s) in 8 seconds

This command creates a hello_world.sierra.json file in target/dev. Let's ignore the Sierra file for now.

If you have installed Cairo correctly, you should be able to run the main function of your program with the scarb cairo-run command and see the following output:

$ scarb cairo-run

Compiling hello_world v0.1.0 (listings/ch01-getting-started/no_listing_01_hello_world/Scarb.toml)

Finished `dev` profile target(s) in 15 seconds

Running hello_world

Hello, World!

Run completed successfully, returning []

Regardless of your operating system, the string Hello, World! should be printed to the terminal.

If Hello, World! did print, congratulations! You’ve officially written a Cairo program. That makes you a Cairo programmer. Welcome!

Anatomy of a Cairo Program

Let’s review this “Hello, world!” program in detail. Here’s the first piece of the puzzle:

fn main() {

}

These lines define a function named main. The main function is special: it is always the first code that runs in every executable Cairo program. Here, the first line declares a function named main that has no parameters and returns nothing. If there were parameters, they would go inside the parentheses ().

The function body is wrapped in {}. Cairo requires curly brackets around all function bodies. It’s good style to place the opening curly bracket on the same line as the function declaration, adding one space in between.

Note: If you want to stick to a standard style across Cairo projects, you can use the automatic formatter tool available with scarb fmt to format your code in a particular style (more on scarb fmt in Appendix F). The Cairo team has included this tool with the standard Cairo distribution, as cairo-run is, so it should already be installed on your computer!

The body of the main function holds the following code:

println!("Hello, World!");

This line does all the work in this little program: it prints text to the screen. There are four important details to notice here.

First, Cairo style is to indent with four spaces, not a tab.

Second, println! calls a Cairo macro. If it had called a function instead, it would be entered as println (without the !).

We’ll discuss Cairo macros in more detail in the "Macros" chapter. For now, you just need to know that using a ! means that you’re calling a macro instead of a normal function and that macros don’t always follow the same rules as functions.

Third, you see the "Hello, World!" string. We pass this string as an argument to println!, and the string is printed to the screen.

Fourth, we end the line with a semicolon (;), which indicates that this expression is over and the next one is ready to begin. Most lines of Cairo code end with a semicolon.
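To make the difference between a macro call and a function call concrete, here is a minimal sketch. The describe function is a hypothetical helper introduced only for this illustration, and the sketch assumes that the format! macro and the {} placeholder behave as they do for println! elsewhere in this book.

```cairo
// `describe` is a hypothetical helper used only for this illustration.
// It is an ordinary function, so it is called without `!`.
fn describe(x: u32) -> ByteArray {
    // `format!` is a macro: it builds a string from a template,
    // replacing the `{}` placeholder with the argument.
    format!("The value of x is: {}", x)
}

fn main() {
    // Macro call: note the `!` after the name.
    println!("Hello, World!");

    // Function call: no `!`. Each statement ends with a semicolon.
    let message = describe(5);
    println!("{}", message);
}
```

Placed in src/hello_world.cairo, this should run with scarb cairo-run in the same way as the original program.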

Summary

Let’s recap what we’ve learned so far about Scarb:

- We can install the latest stable versions of Cairo, Scarb, and Starknet Foundry using starkup.

- We can create a project using scarb new.

- We can build a project using scarb build to generate the compiled Sierra code.

- We can execute a Cairo program using the scarb cairo-run command.

An additional advantage of using Scarb is that the commands are the same no matter which operating system you’re working on. So, at this point, we’ll no longer provide specific instructions for Linux and macOS versus Windows.

You’re already off to a great start on your Cairo journey! This is a great time to build a more substantial program to get used to reading and writing Cairo code.

Proving That A Number Is Prime

Let’s dive into Cairo by working through a hands-on project together! This section introduces you to key Cairo concepts and the process of generating zero-knowledge proofs locally, a powerful feature enabled by Cairo in combination with the Stwo prover. You’ll learn about functions, control flow, executable targets, Scarb workflows, and how to prove a statement — all while practicing the fundamentals of Cairo programming. In later chapters, we’ll explore these ideas in more depth.

For this project, we’ll implement a classic mathematical problem suited for zero-knowledge proofs: proving that a number is prime. This is the ideal project to introduce you to the concept of zero-knowledge proofs in Cairo, because while finding prime numbers is a complex task, proving that a number is prime is straightforward.

Here’s how it works: the program will take an input number from the user and check whether it’s prime using a trial division algorithm. Then, we’ll use Scarb to execute the program and generate a proof that the primality check was performed correctly, so that anyone can verify your proof to trust that you found a prime number. The user will input a number, and we’ll output whether it’s prime, followed by generating and verifying a proof.

Setting Up a New Project

To get started, ensure you have Scarb 2.11.4 or later installed (see Installation for details). We’ll use Scarb to create and manage our Cairo project.

Open a terminal in your projects directory and create a new Scarb project:

scarb new prime_prover

cd prime_prover

The scarb new command creates a new directory called prime_prover with a basic project structure. Let’s examine the generated Scarb.toml file:

Filename: Scarb.toml

[package]

name = "prime_prover"

version = "0.1.0"

edition = "2024_07"

[dependencies]

[dev-dependencies]

cairo_test = "2.11.4"

This is a minimal manifest file for a Cairo project. However, since we want to create an executable program that we can prove, we need to modify it. Update Scarb.toml to define an executable target and include the cairo_execute plugin:

Filename: Scarb.toml

[package]

name = "prime_prover"

version = "0.1.0"

edition = "2024_07"

[[target.executable]]

[cairo]

enable-gas = false

[dependencies]

cairo_execute = "2.11.4"

Here’s what we’ve added:

- [[target.executable]] specifies that this package compiles to a Cairo executable (not a library or Starknet contract).

- [cairo] enable-gas = false disables gas tracking, which is required for executable targets since gas is specific to Starknet contracts.

- [dependencies] cairo_execute = "2.11.4" adds the plugin needed to execute and prove our program.

Now, check the generated src/lib.cairo, which is a simple placeholder. Since we’re building an executable, we’ll replace this with a function annotated with #[executable] to define our entry point.

Writing the Prime-Checking Logic

Let’s write a program to check if a number is prime. A number is prime if it’s greater than 1 and divisible only by 1 and itself. We’ll implement a simple trial division algorithm and mark it as executable. Replace the contents of src/lib.cairo with the following:

Filename: src/lib.cairo

/// Checks if a number is prime
///
/// # Arguments
///
/// * `n` - The number to check
///
/// # Returns
///
/// * `true` if the number is prime
/// * `false` if the number is not prime
fn is_prime(n: u32) -> bool {
    if n <= 1 {
        return false;
    }
    if n == 2 {
        return true;
    }
    if n % 2 == 0 {
        return false;
    }
    let mut i = 3;
    let mut is_prime = true;
    loop {
        if i * i > n {
            break;
        }
        if n % i == 0 {
            is_prime = false;
            break;
        }
        i += 2;
    }
    is_prime
}

// Executable entry point
#[executable]
fn main(input: u32) -> bool {
    is_prime(input)
}

Let’s break this down:

The is_prime function:

- Takes a u32 input (an unsigned 32-bit integer) and returns a bool.

- Checks edge cases: numbers ≤ 1 are not prime, 2 is prime, even numbers > 2 are not prime.

- Uses a loop to test odd divisors up to the square root of n. If no divisors are found, the number is prime.

The main function:

- Marked with #[executable], indicating it’s the entry point for our program.

- Takes a u32 input from the user and returns a bool indicating whether it’s prime.

- Calls is_prime to perform the check.

This is a straightforward implementation, but it’s perfect for demonstrating proving in Cairo.
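One detail of the loop bound is worth a closer look. The check i * i > n multiplies two u32 values, and for inputs close to the u32 maximum the product can exceed the u32 range before the comparison happens. Assuming Cairo's integer arithmetic panics on overflow, a sketch of an overflow-safe variant compares i against n / i instead, which is equivalent for positive integers. The function name is_prime_no_overflow is hypothetical and chosen for this illustration.

```cairo
/// Trial division with an overflow-safe loop bound.
/// `is_prime_no_overflow` is a hypothetical name used for this sketch.
fn is_prime_no_overflow(n: u32) -> bool {
    if n <= 1 {
        return false;
    }
    if n == 2 {
        return true;
    }
    if n % 2 == 0 {
        return false;
    }
    let mut i = 3;
    let mut found_divisor = false;
    // `i <= n / i` holds exactly when `i * i <= n`, but it never multiplies,
    // so it cannot overflow.
    while i <= n / i {
        if n % i == 0 {
            found_divisor = true;
            break;
        }
        i += 2;
    }
    !found_divisor
}
```

The trade-off is one extra division per iteration in exchange for a check that stays within range for every u32 input.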

Executing the Program

Now let’s run the program with Scarb to test it. Use the scarb execute command and provide an input number as an argument:

scarb execute -p prime_prover --print-program-output --arguments 17

- -p prime_prover specifies the package name (matches Scarb.toml).

- --print-program-output displays the result.

- --arguments 17 passes the number 17 as input.

You should see output like this:

$ scarb execute -p prime_prover --print-program-output --arguments 17

Compiling prime_prover v0.1.0 (listings/ch01-getting-started/prime_prover/Scarb.toml)

Finished `dev` profile target(s) in 2 seconds

Executing prime_prover

Program output:

0

1

Saving output to: target/execute/prime_prover/execution2

The output represents whether the program executed successfully and the result of the program. Here, 0 indicates success (no panic), and 1 represents true (17 is prime). Try a few more numbers:

$ scarb execute -p prime_prover --print-program-output --arguments 4

[0, 0] # 4 is not prime

$ scarb execute -p prime_prover --print-program-output --arguments 23

[0, 1] # 23 is prime

Each execution creates a numbered folder, such as ./target/execute/prime_prover/execution1/, containing files like air_public_input.json, air_private_input.json, trace.bin, and memory.bin. These are the artifacts needed for proving.

Generating a Zero-Knowledge Proof

Now for the exciting part: proving that the primality check was computed correctly without revealing the input! Cairo 2.10 integrates the Stwo prover via Scarb, allowing us to generate a proof directly. Run:

$ scarb prove --execution-id 1

Proving prime_prover

warn: soundness of proof is not yet guaranteed by Stwo, use at your own risk

Saving proof to: target/execute/prime_prover/execution1/proof/proof.json

--execution-id 1 points to the first execution (from the execution1 folder).

This command generates a proof.json file in ./target/execute/prime_prover/execution1/proof/. The proof demonstrates that the program executed correctly for some input, producing a true or false output.

Verifying the Proof

To ensure the proof is valid, verify it with:

$ scarb verify --execution-id 1

Verifying prime_prover

Verified proof successfully

If successful, you’ll see a confirmation message. This verifies that the computation (primality check) was performed correctly, aligning with the public inputs, without needing to re-run the program.

Improving the Program: Handling Input Errors

Currently, our program assumes the input is a valid u32. What if we want to handle larger numbers or invalid inputs? Cairo’s u32 has a maximum value of 2^32 - 1 (4,294,967,295), and inputs must be provided as integers. Let’s modify the program to use u128 and add a basic check. Update src/lib.cairo:

Filename: src/lib.cairo

/// Checks if a number is prime
///
/// # Arguments
///
/// * `n` - The number to check
///
/// # Returns
///
/// * `true` if the number is prime
/// * `false` if the number is not prime
fn is_prime(n: u128) -> bool {
    if n <= 1 {
        return false;
    }
    if n == 2 {
        return true;
    }
    if n % 2 == 0 {
        return false;
    }
    let mut i = 3;
    let mut is_prime = true;
    loop {
        if i * i > n {
            break;
        }
        if n % i == 0 {
            is_prime = false;
            break;
        }
        i += 2;
    }
    is_prime
}

#[executable]
fn main(input: u128) -> bool {
    if input > 1000000 { // Arbitrary limit for demo purposes
        panic!("Input too large, must be <= 1,000,000");
    }
    is_prime(input)
}

- Changed u32 to u128 for a larger range (up to 2^128 - 1).

- Added a check to panic if the input exceeds 1,000,000 (for simplicity; adjust as needed).

Test it:

$ scarb execute -p prime_prover --print-program-output --arguments 1000001

Compiling prime_prover v0.1.0 (listings/ch01-getting-started/prime_prover2/Scarb.toml)

Finished `dev` profile target(s) in 2 seconds

Executing prime_prover

Program output:

1

Saving output to: target/execute/prime_prover/execution2

error: Panicked with "Input too large, must be <= 1,000,000".

If we pass a number greater than 1,000,000, the program will panic - and thus, no proof can be generated. As such, it's not possible to verify a proof for a panicked execution.
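The same guard can also be written with the assert! macro, which panics with the given message when its condition is false. This is a sketch of an alternative, not a change the program requires; ensure_in_range is a hypothetical helper name introduced here.

```cairo
// Hypothetical helper for this sketch; the limit mirrors the one used in main above.
fn ensure_in_range(input: u128) {
    // Panics with the message below when `input` exceeds the limit;
    // otherwise execution simply continues.
    assert!(input <= 1000000, "Input too large, must be <= 1,000,000");
}
```

Calling ensure_in_range(input) as the first statement of main would replace the if block and its panic! call, with the same effect on proving: a panicked execution still cannot be proved.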

Summary

Congratulations! You’ve built a Cairo program to check primality, executed it with Scarb, and generated and verified a zero-knowledge proof using the Stwo prover. This project introduced you to:

- Defining executable targets in Scarb.toml.

- Writing functions and control flow in Cairo.

- Using scarb execute to run programs and generate execution traces.

- Proving and verifying computations with scarb prove and scarb verify.

In the next chapters, you’ll dive deeper into Cairo’s syntax (Chapter 2), ownership (Chapter 4), and other features. For now, experiment with different inputs or modify the primality check — can you optimize it further?
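As one possible answer to that question, here is a sketch of a common refinement of trial division. Every prime greater than 3 has the form 6k - 1 or 6k + 1, so after ruling out multiples of 2 and 3 the loop only needs to test two candidates in every block of six. The function name is_prime_6k is hypothetical, and this is just one of several ways to speed up the check.

```cairo
/// Trial division that only tests divisors of the form 6k - 1 and 6k + 1.
/// `is_prime_6k` is a hypothetical name used for this sketch.
fn is_prime_6k(n: u128) -> bool {
    if n <= 1 {
        return false;
    }
    if n <= 3 {
        return true; // 2 and 3 are prime
    }
    if n % 2 == 0 || n % 3 == 0 {
        return false;
    }
    let mut i = 5;
    let mut result = true;
    // `i <= n / i` is the same bound as `i * i <= n`, written without a multiplication.
    while i <= n / i {
        // `i` is 6k - 1 and `i + 2` is 6k + 1.
        if n % i == 0 || n % (i + 2) == 0 {
            result = false;
            break;
        }
        i += 6;
    }
    result
}
```

Compared with stepping through every odd number, this tests roughly two thirds as many candidate divisors.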

Common Programming Concepts

This chapter covers concepts that appear in almost every programming language and how they work in Cairo. Many programming languages have much in common at their core. None of the concepts presented in this chapter are unique to Cairo, but we’ll discuss them in the context of Cairo and explain the conventions around using these concepts.

Specifically, you’ll learn about variables, basic types, functions, comments, and control flow. These foundations will be in every Cairo program, and learning them early will give you a strong core to start from.

Variables and Mutability

Cairo uses an immutable memory model, meaning that once a memory cell is written to, it can't be overwritten but only read from. To reflect this immutable memory model, variables in Cairo are immutable by default. However, the language abstracts this model and gives you the option to make your variables mutable. Let’s explore how and why Cairo enforces immutability, and how you can make your variables mutable.

When a variable is immutable, once a value is bound to a name, you can’t change that value. To illustrate this, generate a new project called variables in your cairo_projects directory by using scarb new variables.

Then, in your new variables directory, open src/lib.cairo and replace its code with the following code, which won’t compile just yet:

Filename: src/lib.cairo

fn main() {
    let x = 5;
    println!("The value of x is: {}", x);
    x = 6;
    println!("The value of x is: {}", x);
}

Save and run the program using scarb cairo-run. You should receive an error message regarding an immutability error, as shown in this output:

$ scarb cairo-run

Compiling no_listing_01_variables_are_immutable v0.1.0 (listings/ch02-common-programming-concepts/no_listing_01_variables_are_immutable/Scarb.toml)

error: Cannot assign to an immutable variable.

--> listings/ch02-common-programming-concepts/no_listing_01_variables_are_immutable/src/lib.cairo:6:5

x = 6;

^***^

error: could not compile `no_listing_01_variables_are_immutable` due to previous error

error: `scarb metadata` exited with error

This example shows how the compiler helps you find errors in your programs. Compiler errors can be frustrating, but they only mean your program isn’t safely doing what you want it to do yet; they do not mean that you’re not a good programmer! Experienced Caironautes still get compiler errors.

You received the error message Cannot assign to an immutable variable. because you tried to assign a second value to the immutable x variable.

It’s important that we get compile-time errors when we attempt to change a value that’s designated as immutable because this specific situation can lead to bugs. If one part of our code operates on the assumption that a value will never change and another part of our code changes that value, it’s possible that the first part of the code won’t do what it was designed to do. The cause of this kind of bug can be difficult to track down after the fact, especially when the second piece of code changes the value only sometimes.

Cairo, unlike most other languages, has immutable memory. This makes a whole class of bugs impossible, because values will never change unexpectedly. This makes code easier to reason about.

But mutability can be very useful, and can make code more convenient to write. Although variables are immutable by default, you can make them mutable by adding mut in front of the variable name. Adding mut also conveys intent to future readers of the code by indicating that other parts of the code will be changing the value associated with this variable.

However, you might be wondering at this point what exactly happens when a variable is declared as mut, as we previously mentioned that Cairo's memory is immutable. The answer is that the value is immutable, but the variable isn't. The value associated with the variable can be changed. Assigning to a mutable variable in Cairo is essentially equivalent to redeclaring it to refer to another value in another memory cell, but the compiler handles that for you, and the keyword mut makes it explicit.

Upon examining the low-level Cairo Assembly code, it becomes clear that variable mutation is implemented as syntactic sugar, which translates mutation operations into a series of steps equivalent to variable shadowing. The only difference is that at the Cairo level, the variable is not redeclared so its type cannot change.

For example, let’s change src/lib.cairo to the following:

fn main() {

let mut x = 5;

println!("The value of x is: {}", x);

x = 6;

println!("The value of x is: {}", x);

}

When we run the program now, we get this:

$ scarb cairo-run

Compiling no_listing_02_adding_mut v0.1.0 (listings/ch02-common-programming-concepts/no_listing_02_adding_mut/Scarb.toml)

Finished `dev` profile target(s) in 4 seconds

Running no_listing_02_adding_mut

The value of x is: 5

The value of x is: 6

Run completed successfully, returning []

We’re allowed to change the value bound to x from 5 to 6 when mut is used. Ultimately, deciding whether to use mutability or not is up to you and depends on what you think is clearest in that particular situation.

Constants

Like immutable variables, constants are values that are bound to a name and are not allowed to change, but there are a few differences between constants and variables.

First, you aren’t allowed to use mut with constants. Constants aren’t just immutable by default; they’re always immutable. You declare constants using the const keyword instead of the let keyword, and the type of the value must be annotated. We’ll cover types and type annotations in the next section, “Data Types”, so don’t worry about the details right now. Just know that you must always annotate the type.

Constants can be declared with any of the usual data types, including structs, enums, and fixed-size arrays.

Constants can only be declared in the global scope, which makes them useful for values that many parts of code need to know about.

The last difference is that constants may natively be set only to a constant expression, not the result of a value that could only be computed at runtime.

Here’s an example of constant declarations:

struct AnyStruct {

a: u256,

b: u32,

}

enum AnyEnum {

A: felt252,

B: (usize, u256),

}

const ONE_HOUR_IN_SECONDS: u32 = 3600;

const STRUCT_INSTANCE: AnyStruct = AnyStruct { a: 0, b: 1 };

const ENUM_INSTANCE: AnyEnum = AnyEnum::A('any enum');

const BOOL_FIXED_SIZE_ARRAY: [bool; 2] = [true, false];

Nonetheless, it is possible to use the consteval_int! macro to create a const variable that is the result of some computation:

const ONE_HOUR_IN_SECONDS: u32 = consteval_int!(60 * 60);

We will dive into more detail about macros in the dedicated section.

Cairo's naming convention for constants is to use all uppercase with underscores between words.

Constants are valid for the entire time a program runs, within the scope in which they were declared. This property makes constants useful for values in your application domain that multiple parts of the program might need to know about, such as the maximum number of points any player of a game is allowed to earn, or the speed of light.

Naming hardcoded values used throughout your program as constants is useful in conveying the meaning of that value to future maintainers of the code. It also helps to have only one place in your code you would need to change if the hardcoded value needed to be updated in the future.
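As a minimal sketch of how a named constant might be shared by several parts of a program, the example below caps a score at a maximum. The names MAX_POINTS and clamp_score are hypothetical and chosen only for illustration; it uses an if expression, which is covered in the "Control Flow" section.

```cairo
// Hypothetical constant: the maximum number of points a player may earn.
const MAX_POINTS: u32 = 100_000;

// Hypothetical helper: returns the score, capped at MAX_POINTS.
fn clamp_score(score: u32) -> u32 {
    if score > MAX_POINTS {
        MAX_POINTS
    } else {
        score
    }
}

fn main() {
    // A score below the cap is returned unchanged.
    assert!(clamp_score(42) == 42);
    // A score above the cap is replaced by the constant.
    assert!(clamp_score(250_000) == MAX_POINTS);
    println!("Capped score: {}", clamp_score(250_000));
}
```

If the cap ever needs to change, only the const declaration has to be edited.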

Shadowing

Variable shadowing refers to the declaration of a new variable with the same name as a previous variable. Caironautes say that the first variable is shadowed by the second, which means that the second variable is what the compiler will see when you use the name of the variable. In effect, the second variable overshadows the first, taking any uses of the variable name to itself until either it itself is shadowed or the scope ends.

We can shadow a variable by using the same variable’s name and repeating the use of the let keyword as follows:

fn main() {

let x = 5;

let x = x + 1;

{

let x = x * 2;

println!("Inner scope x value is: {}", x);

}

println!("Outer scope x value is: {}", x);

}

This program first binds x to a value of 5. Then it creates a new variable x by repeating let x =, taking the original value and adding 1 so the value of x is then 6. Then, within an inner scope created with the curly brackets, the third let statement also shadows x and creates a new variable, multiplying the previous value by 2 to give x a value of 12. When that scope is over, the inner shadowing ends and x returns to being 6.

When we run this program, it will output the following:

$ scarb cairo-run

Compiling no_listing_03_shadowing v0.1.0 (listings/ch02-common-programming-concepts/no_listing_03_shadowing/Scarb.toml)

Finished `dev` profile target(s) in 4 seconds

Running no_listing_03_shadowing

Inner scope x value is: 12

Outer scope x value is: 6

Run completed successfully, returning []

Shadowing is different from marking a variable as mut because we’ll get a compile-time error if we accidentally try to reassign to this variable without using the let keyword. By using let, we can perform a few transformations on a value but have the variable be immutable after those transformations have been completed.

Another distinction between mut and shadowing is that when we use the let keyword again, we are effectively creating a new variable, which allows us to change the type of the value while reusing the same name. As mentioned before, variable shadowing and mutable variables are equivalent at the lower level. The only difference is that by shadowing a variable, the compiler will not complain if you change its type. For example, say our program performs a type conversion between the u64 and felt252 types:

fn main() {

let x: u64 = 2;

println!("The value of x is {} of type u64", x);

let x: felt252 = x.into(); // converts x to a felt, type annotation is required.

println!("The value of x is {} of type felt252", x);

}

The first x variable has a u64 type while the second x variable has a felt252 type. Shadowing thus spares us from having to come up with different names, such as x_u64 and x_felt252; instead, we can reuse the simpler x name. However, if we try to use mut for this, as shown here, we’ll get a compile-time error:

fn main() {

let mut x: u64 = 2;

println!("The value of x is: {}", x);

x = 5_u8;

println!("The value of x is: {}", x);

}

The error says we were expecting a u64 (the original type) but we got a different type:

$ scarb cairo-run

Compiling no_listing_05_mut_cant_change_type v0.1.0 (listings/ch02-common-programming-concepts/no_listing_05_mut_cant_change_type/Scarb.toml)

error: Unexpected argument type. Expected: "core::integer::u64", found: "core::integer::u8".

--> listings/ch02-common-programming-concepts/no_listing_05_mut_cant_change_type/src/lib.cairo:6:9

x = 5_u8;

^**^

error: could not compile `no_listing_05_mut_cant_change_type` due to previous error

error: `scarb metadata` exited with error
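To summarize the comparison, here is a small sketch that computes the same value once with mut and once with shadowing. The function names with_mut and with_shadowing are hypothetical; the point is only that, as long as the type stays the same, both styles produce the same result.

```cairo
// Reassigns a mutable variable; the type of x must stay u32.
fn with_mut() -> u32 {
    let mut x = 5;
    x = x + 1;
    x
}

// Declares a new variable named x each time; the type could change here.
fn with_shadowing() -> u32 {
    let x = 5;
    let x = x + 1;
    x
}

fn main() {
    assert!(with_mut() == 6);
    assert!(with_mut() == with_shadowing());
}
```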

Now that we’ve explored how variables work, let’s look at more data types they can have.

Data Types

Every value in Cairo is of a certain data type, which tells Cairo what kind of data is being specified so it knows how to work with that data. This section covers two subsets of data types: scalars and compounds.

Keep in mind that Cairo is a statically typed language, which means that it must know the types of all variables at compile time. The compiler can usually infer the desired type based on the value and its usage. In cases when many types are possible, we can use a conversion method where we specify the desired output type.

fn main() {

let x: felt252 = 3;

let y: u32 = x.try_into().unwrap();

}

You’ll see different type annotations for other data types.

Scalar Types

A scalar type represents a single value. Cairo has three primary scalar types: felts, integers, and booleans. You may recognize these from other programming languages. Let’s jump into how they work in Cairo.

Felt Type

In Cairo, if you don't specify the type of a variable or argument, its type defaults to a field element, represented by the keyword felt252. In the context of Cairo, when we say “a field element” we mean an integer in the range \( 0 \leq x < P \), where \( P \) is a very large prime number currently equal to \( {2^{251}} + 17 \cdot {2^{192}} + 1 \). When adding, subtracting, or multiplying, if the result falls outside the specified range of the prime number, an overflow (or underflow) occurs, and an appropriate multiple of \( P \) is added or subtracted to bring the result back within the range (i.e., the result is computed \( \mod P \)).

The most important difference between integers and field elements is division: division of field elements (and therefore division in Cairo) is unlike division on regular CPUs, where integer division \( \frac{x}{y} \) is defined as \( \left\lfloor \frac{x}{y} \right\rfloor \), so the integer part of the quotient is returned (you get \( \frac{7}{3} = 2 \)) and it may or may not satisfy the equation \( \frac{x}{y} \cdot y == x \), depending on the divisibility of x by y.

In Cairo, the result of \( \frac{x}{y} \) is defined to always satisfy the equation \( \frac{x}{y} \cdot y == x \). If y divides x as integers, you will get the expected result in Cairo (for example \( \frac{6}{2} \) will indeed result in 3).

But when y does not divide x, you may get a surprising result: for example, since \( 2 \cdot \frac{P + 1}{2} = P + 1 \equiv 1 \mod P \), the value of \( \frac{1}{2} \) in Cairo is \( \frac{P + 1}{2} \) (and not 0 or 0.5), as it satisfies the above equation.
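The sketch below illustrates this behaviour. It assumes that the core library exposes a felt252_div function taking a NonZero<felt252> divisor, and that a felt252 can be converted to NonZero<felt252> with try_into; check both against the core library version you are using.

```cairo
use core::felt252_div;
use core::zeroable::NonZero;

fn main() {
    // The divisor must be wrapped in NonZero (assumed conversion via try_into).
    let two_felt: felt252 = 2;
    let two: NonZero<felt252> = two_felt.try_into().unwrap();

    // 2 divides 6 as integers, so the result is the familiar one.
    assert!(felt252_div(6, two) == 3);

    // 2 does not divide 1: the result is the field element h with h * 2 == 1.
    let half = felt252_div(1, two);
    assert!(half * 2 == 1);
    assert!(half != 0);
    println!("1 / 2 as a field element: {}", half);
}
```

The printed value is a very large number rather than 0 or 0.5, which is why field division should not be used where integer division is intended.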

Integer Types

The felt252 type is a fundamental type that serves as the basis for creating all types in the core library.

However, it is highly recommended for programmers to use the integer types instead of the felt252 type whenever possible, as the integer types come with added security features that provide extra protection against potential vulnerabilities in the code, such as overflow and underflow checks. By using these integer types, programmers can ensure that their programs are more secure and less susceptible to attacks or other security threats.

An integer is a number without a fractional component. This type declaration indicates the number of bits the programmer can use to store the integer.

Table 3-1 shows the built-in integer types in Cairo. We can use any of these variants to declare the type of an integer value.

| Length | Unsigned |

|---|---|

| 8-bit | u8 |

| 16-bit | u16 |

| 32-bit | u32 |

| 64-bit | u64 |

| 128-bit | u128 |

| 256-bit | u256 |

| 32-bit | usize |

Each variant has an explicit size. Note that for now, the usize type is just an alias for u32; however, it might be useful when in the future Cairo can be compiled to MLIR.

Because these types are unsigned, they can't hold a negative number. The following code panics at runtime, because 1 - 3 underflows a u8:

fn sub_u8s(x: u8, y: u8) -> u8 {

x - y

}

fn main() {

sub_u8s(1, 3);

}

All integer types previously mentioned fit into a felt252, except for u256 which needs 4 more bits to be stored. Under the hood, u256 is basically a struct with 2 fields: u256 {low: u128, high: u128}.
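As a small illustration of that layout, the following sketch builds a u256 slightly above \( 2^{128} \) and inspects its two halves. The variable names are arbitrary.

```cairo
fn main() {
    // 2^128 + 2, written in hex with _ separators for readability.
    let big: u256 = 0x1_00000000_00000000_00000000_00000002;

    // The value is split across two u128 fields.
    assert!(big.high == 1);
    assert!(big.low == 2);

    // The same value can be written as a struct literal.
    let rebuilt = u256 { low: 2, high: 1 };
    assert!(rebuilt == big);
}
```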

Cairo also provides support for signed integers, starting with the prefix i. These integers can represent both positive and negative values, with sizes ranging from i8 to i128.

Each signed variant can store numbers from \( -({2^{n - 1}}) \) to \( {2^{n - 1}} - 1 \) inclusive, where n is the number of bits that variant uses. So an i8 can store numbers from \( -({2^7}) \) to \( {2^7} - 1 \), which equals -128 to 127.

You can write integer literals in any of the forms shown in Table 3-2. Note that number literals that can be multiple numeric types allow a type suffix, such as 57_u8, to designate the type.

It is also possible to use a visual separator _ for number literals, in order to improve code readability.

| Numeric literals | Example |

|---|---|

| Decimal | 98222 |

| Hex | 0xff |

| Octal | 0o04321 |

| Binary | 0b01 |
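The short sketch below writes literals in each of these forms, together with a type suffix and a visual separator. The variable names are illustrative only.

```cairo
fn main() {
    let decimal: u32 = 98_222; // _ is only a visual separator
    let hex: u32 = 0xff;
    let octal: u32 = 0o04321;
    let binary: u32 = 0b01;
    let suffixed = 57_u8; // the suffix fixes the type to u8

    // Each form denotes an ordinary integer value.
    assert!(decimal == 98222);
    assert!(hex == 255);
    assert!(octal == 2257);
    assert!(binary == 1);
    println!("{} {} {} {} {}", decimal, hex, octal, binary, suffixed);
}
```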

So how do you know which type of integer to use? Estimate the maximum value your integer can take and choose the smallest type that can hold it.

The primary situation in which you’d use usize is when indexing some sort of collection.

Numeric Operations

Cairo supports the basic mathematical operations you’d expect for all the integer types: addition, subtraction, multiplication, division, and remainder. Integer division truncates toward zero to the nearest integer. The following code shows how you’d use each numeric operation in a let statement:

fn main() {

// addition

let sum = 5_u128 + 10_u128;

// subtraction

let difference = 95_u128 - 4_u128;

// multiplication

let product = 4_u128 * 30_u128;

// division

let quotient = 56_u128 / 32_u128; //result is 1

let quotient = 64_u128 / 32_u128; //result is 2

// remainder

let remainder = 43_u128 % 5_u128; // result is 3

}

Each expression in these statements uses a mathematical operator and evaluates to a single value, which is then bound to a variable.

Appendix B contains a list of all operators that Cairo provides.

The Boolean Type

As in most other programming languages, a Boolean type in Cairo has two possible values: true and false. Booleans are one felt252 in size. The Boolean type in Cairo is specified using bool. For example:

fn main() {

let t = true;

let f: bool = false; // with explicit type annotation

}

When declaring a bool variable, it is mandatory to use either true or false literals as value. Hence, it is not allowed to use integer literals (i.e. 0 instead of false) for bool declarations.

The main way to use Boolean values is through conditionals, such as an if expression. We’ll cover how if expressions work in Cairo in the "Control Flow" section.

String Types

Cairo doesn't have a native type for strings but provides two ways to handle them: short strings using single quotes and ByteArray using double quotes.

Short strings

A short string is an ASCII string where each character is encoded on one byte (see the ASCII table). For example:

- 'a' is equivalent to 0x61

- 'b' is equivalent to 0x62

- 'c' is equivalent to 0x63

- 0x616263 is equivalent to 'abc'.

Cairo uses the felt252 type for short strings. Because a felt252 holds 251 bits, a short string is limited to 31 characters (31 * 8 = 248 bits, which is the largest multiple of 8 that fits in 251 bits).

You can choose to represent your short string with a hexadecimal value like 0x616263 or by directly writing the string using single quotes like 'abc', which is more convenient.

Here are some examples of declaring short strings in Cairo:

fn main() {

let my_first_char = 'C';

let my_first_char_in_hex = 0x43;

let my_first_string = 'Hello world';

let my_first_string_in_hex = 0x48656C6C6F20776F726C64;

}
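To make the equivalence concrete, this minimal sketch checks that a short string and its hexadecimal encoding are the same felt252 value. The variable names are illustrative.

```cairo
fn main() {
    let from_text: felt252 = 'abc';
    let from_hex: felt252 = 0x616263;

    // Both literals denote the same field element.
    assert!(from_text == from_hex);

    // A single character is just its one-byte ASCII code.
    let letter: felt252 = 'C';
    assert!(letter == 0x43);
}
```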

Byte Array Strings

Cairo's Core Library provides a ByteArray type for handling strings and byte sequences longer than short strings. This type is particularly useful for longer strings or when you need to perform operations on the string data.

The ByteArray in Cairo is implemented as a combination of two parts:

- An array of bytes31 words, where each word contains 31 bytes of data.

- A pending felt252 word that acts as a buffer for bytes that haven't yet filled a complete bytes31 word.

This design enables efficient handling of byte sequences while aligning with Cairo's memory model and basic types. Developers interact with ByteArray through its provided methods and operators, abstracting away the internal implementation details.

Unlike short strings, ByteArray strings can contain more than 31 characters and are written using double quotes:

fn main() {

let long_string: ByteArray = "this is a string which has more than 31 characters";

}
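As a rough sketch of working with ByteArray through its methods, the example below appends one string to another and checks the resulting length. It assumes the append and len methods of the core library's ByteArrayTrait; the variable names are arbitrary.

```cairo
use core::byte_array::ByteArrayTrait;

fn main() {
    let mut message: ByteArray = "Short strings stop at 31 chars, ";
    let tail: ByteArray = "but a ByteArray can keep growing.";

    // append takes a snapshot of the other ByteArray and extends message in place.
    message.append(@tail);

    // The combined text is longer than any short string could be.
    assert!(message.len() > 31);
    println!("{}", message);
}
```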

Compound Types

The Tuple Type

A tuple is a general way of grouping together a number of values with a variety of types into one compound type. Tuples have a fixed length: once declared, they cannot grow or shrink in size.

We create a tuple by writing a comma-separated list of values inside parentheses. Each position in the tuple has a type, and the types of the different values in the tuple don’t have to be the same. We’ve added optional type annotations in this example:

fn main() {

let tup: (u32, u64, bool) = (10, 20, true);

}

The variable tup binds to the entire tuple because a tuple is considered a single compound element. To get the individual values out of a tuple, we can use pattern matching to destructure a tuple value, like this:

fn main() {

let tup = (500, 6, true);

let (x, y, z) = tup;

if y == 6 {

println!("y is 6!");

}

}

This program first creates a tuple and binds it to the variable tup. It then uses a pattern with let to take tup and turn it into three separate variables, x, y, and z. This is called destructuring because it breaks the single tuple into three parts. Finally, the program prints y is 6! as the value of y is 6.

We can also declare the tuple with value and types, and destructure it at the same time. For example:

fn main() {

let (x, y): (felt252, felt252) = (2, 3);

}

The Unit Type ()

A unit type is a type which has only one value ().

It is represented by a tuple with no elements.

Its size is always zero, and it is guaranteed to not exist in the compiled code.

You might be wondering why you would even need a unit type? In Cairo, everything is an expression, and an expression that returns nothing actually returns () implicitly.
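A minimal sketch of where the unit value shows up in practice: a function without a declared return type, and a block that ends with a semicolon, both evaluate to (). The function name announce is hypothetical.

```cairo
// No return type is declared, so this function returns ().
fn announce(value: u32) {
    println!("value is {}", value);
}

fn main() {
    // The call can be bound to a variable of type ().
    let _from_call: () = announce(7);

    // A block whose last statement ends with a semicolon also evaluates to ().
    let _from_block: () = {
        let _x = 3;
    };
}
```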

The Fixed Size Array Type

Another way to have a collection of multiple values is with a fixed size array. Unlike a tuple, every element of a fixed size array must have the same type.

We write the values in a fixed-size array as a comma-separated list inside square brackets. The array’s type is written using square brackets with the type of each element, a semicolon, and then the number of elements in the array, like so:

fn main() {

let arr1: [u64; 5] = [1, 2, 3, 4, 5];

}

In the type annotation [u64; 5], u64 specifies the type of each element, while 5 after the semicolon defines the array's length. This syntax ensures that the array always contains exactly 5 elements of type u64.

Fixed size arrays are useful when you want to hardcode a potentially long sequence of data directly in your program. This type of array must not be confused with the Array<T> type, which is a similar collection type provided by the core library that is allowed to grow in size. If you're unsure whether to use a fixed size array or the Array<T> type, chances are that you are looking for the Array<T> type.

Because their size is known at compile-time, fixed-size arrays don't require runtime memory management, which makes them more efficient than dynamically-sized arrays. Overall, they're more useful when you know the number of elements will not need to change. For example, they can be used to efficiently store lookup tables that won't change during runtime. If you were using the names of the month in a program, you would probably use a fixed size array rather than an Array<T> because you know it will always contain 12 elements:

let months = [

'January', 'February', 'March', 'April', 'May', 'June', 'July', 'August', 'September',

'October', 'November', 'December',

];

You can also initialize an array to contain the same value for each element by specifying the initial value, followed by a semicolon, and then the length of the array in square brackets, as shown here:

let a = [3; 5];

The array named a will contain 5 elements that will all be set to the value 3 initially. This is the same as writing let a = [3, 3, 3, 3, 3]; but in a more concise way.

Accessing Fixed Size Array Elements

As a fixed-size array is a data structure known at compile time, its content is represented as a sequence of values in the program bytecode. Accessing an element of that array will simply read that value from the program bytecode efficiently.

We have two different ways of accessing fixed size array elements:

- Deconstructing the array into multiple variables, as we did with tuples.

fn main() {

let my_arr = [1, 2, 3, 4, 5];

// Accessing elements of a fixed-size array by deconstruction

let [a, b, c, _, _] = my_arr;

println!("c: {}", c); // c: 3

}

- Converting the array to a Span, that supports indexing. This operation is free and doesn't incur any runtime cost.

fn main() {

let my_arr = [1, 2, 3, 4, 5];

// Accessing elements of a fixed-size array by index

let my_span = my_arr.span();

println!("my_span[2]: {}", my_span[2]); // my_span[2]: 3

}

Note that if we plan to repeatedly access the array, then it makes sense to call .span() only once and keep it available throughout the accesses.
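The sketch below follows that advice: it calls .span() once and then reads every element through the span inside a loop. The while loop is covered in the "Control Flow" section, and the variable names are illustrative.

```cairo
fn main() {
    let my_arr = [10_u32, 20, 30, 40, 50];

    // Convert once, then reuse the span for every access.
    let my_span = my_arr.span();

    let mut sum = 0_u32;
    let mut i: usize = 0;
    while i != my_span.len() {
        // Indexing a span yields a snapshot, so * is used to read the value.
        sum += *my_span[i];
        i += 1;
    }

    assert!(sum == 150);
    println!("sum: {}", sum);
}
```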

Type Conversion

Cairo addresses conversion between types by using the try_into and into methods provided by the TryInto and Into traits from the core library. There are numerous implementations of these traits within the standard library for conversion between types, and they can be implemented for custom types as well.

Into

The Into trait allows for a type to define how to convert itself into another type. It can be used for type conversion when success is guaranteed, such as when the source type is smaller than the destination type.

To perform the conversion, call var.into() on the source value to convert it to another type. The new variable's type must be explicitly defined, as demonstrated in the example below.

fn main() {

let my_u8: u8 = 10;

let my_u16: u16 = my_u8.into();

let my_u32: u32 = my_u16.into();

let my_u64: u64 = my_u32.into();

let my_u128: u128 = my_u64.into();

let my_felt252 = 10;

// As a felt252 is smaller than a u256, we can use the into() method

let my_u256: u256 = my_felt252.into();

let my_other_felt252: felt252 = my_u8.into();

let my_third_felt252: felt252 = my_u16.into();

}
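As noted earlier, Into can also be implemented for custom types. The following sketch defines a hypothetical Celsius struct and an impl named CelsiusIntoFelt252; both names are made up for illustration and are not part of the core library.

```cairo
// Hypothetical custom type wrapping a temperature in whole degrees.
#[derive(Copy, Drop)]
struct Celsius {
    degrees: u32,
}

// Infallible conversion from Celsius to felt252.
impl CelsiusIntoFelt252 of Into<Celsius, felt252> {
    fn into(self: Celsius) -> felt252 {
        // u32 always fits in a felt252, so the core Into impl can be reused.
        self.degrees.into()
    }
}

fn main() {
    let temperature = Celsius { degrees: 21 };
    // As with the built-in conversions, the target type is annotated.
    let as_felt: felt252 = temperature.into();
    assert!(as_felt == 21);
}
```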

TryInto

Similar to Into, TryInto is a generic trait for converting between types. Unlike Into, the TryInto trait is used for fallible conversions, and as such, returns Option<T>. An example of a fallible conversion is when the source value might not fit in the target type.

Also similar to Into is the process to perform the conversion; just call var.try_into() on the source value to convert it to another type. The new variable's type also must be explicitly defined, as demonstrated in the example below.

fn main() {

let my_u256: u256 = 10;

// Since a u256 might not fit in a felt252, we need to unwrap the Option<T> type

let my_felt252: felt252 = my_u256.try_into().unwrap();

let my_u128: u128 = my_felt252.try_into().unwrap();

let my_u64: u64 = my_u128.try_into().unwrap();

let my_u32: u32 = my_u64.try_into().unwrap();

let my_u16: u16 = my_u32.try_into().unwrap();

let my_u8: u8 = my_u16.try_into().unwrap();

let my_large_u16: u16 = 2048;

let my_large_u8: u8 = my_large_u16.try_into().unwrap(); // panics with 'Option::unwrap failed.'

}
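When a panic is not acceptable, the Option<T> returned by try_into can be inspected instead of unwrapped. Here is a minimal sketch using a match expression, which is covered later in the book; the helper name to_u8_or_default is hypothetical.

```cairo
// Hypothetical helper: converts to u8, falling back to a default when the value does not fit.
fn to_u8_or_default(value: u16, default: u8) -> u8 {
    let converted: Option<u8> = value.try_into();
    match converted {
        Option::Some(small) => small,
        Option::None => default,
    }
}

fn main() {
    // 200 fits in a u8, so it is returned as is.
    assert!(to_u8_or_default(200, 0) == 200);
    // 2048 does not fit, so the default is used and nothing panics.
    assert!(to_u8_or_default(2048, 255) == 255);
}
```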

Functions

Functions are prevalent in Cairo code. You’ve already seen one of the most important functions in the language: the main function, which is the entry point of many programs. You’ve also seen the fn keyword, which allows you to declare new functions.

Cairo code uses snake case as the conventional style for function and variable names, in which all letters are lowercase and underscores separate words. Here’s a program that contains an example function definition:

fn another_function() {

println!("Another function.");

}

fn main() {

println!("Hello, world!");

another_function();

}

We define a function in Cairo by entering fn followed by a function name and a set of parentheses. The curly brackets tell the compiler where the function body begins and ends.

We can call any function we’ve defined by entering its name followed by a set of parentheses. Because another_function is defined in the program, it can be called from inside the main function. Note that we defined another_function before the main function in the source code; we could have defined it after as well. Cairo doesn’t care where you define your functions, only that they’re defined somewhere in a scope that can be seen by the caller.

Let’s start a new project with Scarb named functions to explore functions further. Place the another_function example in src/lib.cairo and run it. You should see the following output:

$ scarb cairo-run

Compiling no_listing_15_functions v0.1.0 (listings/ch02-common-programming-concepts/no_listing_15_functions/Scarb.toml)

Finished `dev` profile target(s) in 4 seconds

Running no_listing_15_functions

Hello, world!

Another function.

Run completed successfully, returning []

The lines execute in the order in which they appear in the main function. First the Hello, world! message prints, and then another_function is called and its message is printed.

Parameters

We can define functions to have parameters, which are special variables that are part of a function’s signature. When a function has parameters, you can provide it with concrete values for those parameters. Technically, the concrete values are called arguments, but in casual conversation, people tend to use the words parameter and argument interchangeably for either the variables in a function’s definition or the concrete values passed in when you call a function.

In this version of another_function we add a parameter:

fn main() {

another_function(5);

}

fn another_function(x: felt252) {

println!("The value of x is: {}", x);

}

Try running this program; you should get the following output:

$ scarb cairo-run

Compiling no_listing_16_single_param v0.1.0 (listings/ch02-common-programming-concepts/no_listing_16_single_param/Scarb.toml)

Finished `dev` profile target(s) in 4 seconds

Running no_listing_16_single_param

The value of x is: 5

Run completed successfully, returning []

The declaration of another_function has one parameter named x. The type of x is specified as felt252. When we pass 5 in to another_function, the println! macro puts 5 where the pair of curly brackets containing x was in the format string.

In function signatures, you must declare the type of each parameter. This is a deliberate decision in Cairo’s design: requiring type annotations in function definitions means the compiler almost never needs you to use them elsewhere in the code to figure out what type you mean. The compiler is also able to give more helpful error messages if it knows what types the function expects.

When defining multiple parameters, separate the parameter declarations with commas, like this:

fn main() {

print_labeled_measurement(5, "h");

}

fn print_labeled_measurement(value: u128, unit_label: ByteArray) {

println!("The measurement is: {value}{unit_label}");

}

This example creates a function named print_labeled_measurement with two parameters. The first parameter is named value and is a u128. The second is named unit_label and is of type ByteArray, Cairo's internal type to represent string literals. The function then prints text containing both the value and the unit_label.

Let’s try running this code. Replace the program currently in your functions project’s src/lib.cairo file with the preceding example and run it using scarb cairo-run:

$ scarb cairo-run

Compiling no_listing_17_multiple_params v0.1.0 (listings/ch02-common-programming-concepts/no_listing_17_multiple_params/Scarb.toml)

Finished `dev` profile target(s) in 5 seconds

Running no_listing_17_multiple_params

The measurement is: 5h

Run completed successfully, returning []

Because we called the function with 5 as the value for value and "h" as the value for unit_label, the program output contains those values.

Named Parameters

In Cairo, named parameters allow you to specify the names of arguments when you call a function. This makes the function calls more readable and self-descriptive.

If you want to use named parameters, you need to specify the name of the parameter and the value you want to pass to it. The syntax is parameter_name: value. If you pass a variable that has the same name as the parameter, you can simply write :parameter_name instead of parameter_name: variable_name.

Here is an example:

fn foo(x: u8, y: u8) {}

fn main() {

let first_arg = 3;

let second_arg = 4;

foo(x: first_arg, y: second_arg);

let x = 1;

let y = 2;

foo(:x, :y)

}

Statements and Expressions

Function bodies are made up of a series of statements optionally ending in an expression. So far, the functions we’ve covered haven’t included an ending expression, but you have seen an expression as part of a statement. Because Cairo is an expression-based language, this is an important distinction to understand. Other languages don’t have the same distinctions, so let’s look at what statements and expressions are and how their differences affect the bodies of functions.

- Statements are instructions that perform some action and do not return a value.

- Expressions evaluate to a resultant value. Let’s look at some examples.

We’ve actually already used statements and expressions. Creating a variable and assigning a value to it with the let keyword is a statement. In Listing 2-1, let y = 6; is a statement.

fn main() {

let y = 6;

}

Listing 2-1: A main function declaration containing one statement

Function definitions are also statements; the entire preceding example is a statement in itself.

Statements do not return values. Therefore, you can’t assign a let statement to another variable, as the following code tries to do; you’ll get an error:

fn main() {

let x = (let y = 6);

}

When you run this program, the error you’ll get looks like this:

$ scarb cairo-run
Compiling no_listing_18_statements_dont_return_values v0.1.0 (listings/ch02-common-programming-concepts/no_listing_20_statements_dont_return_values/Scarb.toml)
error: Missing token TerminalRParen.
 --> listings/ch02-common-programming-concepts/no_listing_20_statements_dont_return_values/src/lib.cairo:3:14
    let x = (let y = 6);
             ^

error: Missing token TerminalSemicolon.
 --> listings/ch02-common-programming-concepts/no_listing_20_statements_dont_return_values/src/lib.cairo:3:14
    let x = (let y = 6);
             ^

error: Missing token TerminalSemicolon.
 --> listings/ch02-common-programming-concepts/no_listing_20_statements_dont_return_values/src/lib.cairo:3:23
    let x = (let y = 6);
                      ^

error: Skipped tokens. Expected: statement.
 --> listings/ch02-common-programming-concepts/no_listing_20_statements_dont_return_values/src/lib.cairo:3:23
    let x = (let y = 6);
                      ^^

warn[E0001]: Unused variable. Consider ignoring by prefixing with `_`.
 --> listings/ch02-common-programming-concepts/no_listing_20_statements_dont_return_values/src/lib.cairo:3:9
    let x = (let y = 6);
        ^

warn[E0001]: Unused variable. Consider ignoring by prefixing with `_`.
 --> listings/ch02-common-programming-concepts/no_listing_20_statements_dont_return_values/src/lib.cairo:3:18
    let x = (let y = 6);
                 ^

error: could not compile `no_listing_18_statements_dont_return_values` due to previous error
error: `scarb metadata` exited with error

The let y = 6 statement does not return a value, so there isn’t anything for x to bind to. This is different from what happens in other languages, such as C and Ruby, where the assignment returns the value of the assignment. In those languages, you can write x = y = 6 and have both x and y have the value 6; that is not the case in Cairo.
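To give two variables the same value in Cairo, bind each one with its own let statement. The following is a minimal sketch; the variable names are illustrative, and it assumes the unannotated literal is inferred as felt252, which can be copied:

```cairo
fn main() {
    // Each binding is its own statement; `let` itself produces no value.
    let y = 6;
    // `y` is copied into `x`, so both remain usable afterwards.
    let x = y;
    println!("x = {}, y = {}", x, y);
}
```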

Expressions evaluate to a value and make up most of the rest of the code that you’ll write in Cairo. Consider a math operation, such as 5 + 6, which is an expression that evaluates to the value 11. Expressions can be part of statements: in Listing 2-1, the 6 in the statement let y = 6; is an expression that evaluates to the value 6.

Calling a function is an expression since it always evaluates to a value: the function's explicit return value, if specified, or the unit value () otherwise.
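As a small sketch of this point, the hypothetical function log_start below declares no return type, yet calling it still yields a value that can be bound to a variable: the unit value ().

```cairo
// Hypothetical function with no declared return type.
fn log_start() {
    println!("starting");
}

fn main() {
    // The call is still an expression; its value is the unit value `()`.
    let _nothing: () = log_start();
}
```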

A new scope block created with curly brackets is an expression, for example:

fn main() {
    let y = {
        let x = 3;
        x + 1
    };
    println!("The value of y is: {}", y);
}

This expression:

{
    let x = 3;
    x + 1
}

is a block that, in this case, evaluates to 4. That value gets bound to y as part of the let statement. Note that the x + 1 line doesn’t have a semicolon at the end, which is unlike most of the lines you’ve seen so far. Expressions do not include ending semicolons. If you add a semicolon to the end of an expression, you turn it into a statement, and it will then not return a value. Keep this in mind as you explore function return values and expressions next.

Functions with Return Values

Functions can return values to the code that calls them. We don’t name return values, but we must declare their type after an arrow (->). In Cairo, the return value of the function is synonymous with the value of the final expression in the block of the body of a function. You can return early from a function by using the return keyword and specifying a value, but most functions return the last expression implicitly. Here’s an example of a function that returns a value:

fn five() -> u32 {
    5
}

fn main() {
    let x = five();
    println!("The value of x is: {}", x);
}

There are no function calls, or even let statements, in the five function: just the number 5 by itself. That’s a perfectly valid function in Cairo. Note that the function’s return type is specified too, as -> u32. Try running this code; the output should look like this:

$ scarb cairo-run
Compiling no_listing_20_function_return_values v0.1.0 (listings/ch02-common-programming-concepts/no_listing_22_function_return_values/Scarb.toml)
Finished `dev` profile target(s) in 4 seconds
Running no_listing_20_function_return_values
The value of x is: 5
Run completed successfully, returning []

The 5 in five is the function’s return value, which is why the return type is u32. Let’s examine this in more detail. There are two important bits: first, the line let x = five(); shows that we’re using the return value of a function to initialize a variable. Because the function five returns a 5, that line is the same as the following:

let x = 5;

Second, the five function has no parameters and defines the type of the return value, but the body of the function is a lonely 5 with no semicolon because it’s an expression whose value we want to return.

Let’s look at another example:

fn main() {
    let x = plus_one(5);
    println!("The value of x is: {}", x);
}

fn plus_one(x: u32) -> u32 {
    x + 1
}

Running this code will print The value of x is: 6. But if we place a semicolon at the end of the line containing x + 1, changing it from an expression to a statement, we’ll get an error:

fn main() {
    let x = plus_one(5);
    println!("The value of x is: {}", x);
}

fn plus_one(x: u32) -> u32 {
    x + 1;
}

$ scarb cairo-run
Compiling no_listing_22_function_return_invalid v0.1.0 (listings/ch02-common-programming-concepts/no_listing_24_function_return_invalid/Scarb.toml)
error: Unexpected return type. Expected: "core::integer::u32", found: "()".
 --> listings/ch02-common-programming-concepts/no_listing_24_function_return_invalid/src/lib.cairo:9:28
fn plus_one(x: u32) -> u32 {
                           ^

error: could not compile `no_listing_22_function_return_invalid` due to previous error
error: `scarb metadata` exited with error

The main error message, Unexpected return type, reveals the core issue with this code. The definition of the function plus_one says that it will return a u32, but statements don’t evaluate to a value, which is expressed by (), the unit type. Therefore, nothing is returned, which contradicts the function definition and results in an error.
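The return keyword mentioned at the start of this section does not appear in the examples above. As a minimal sketch, the hypothetical function clamp_to_limit below returns early from inside an if block and otherwise falls through to its final expression:

```cairo
// Hypothetical helper: caps `value` at `limit`.
fn clamp_to_limit(value: u32, limit: u32) -> u32 {
    if value > limit {
        // Early return: the rest of the body is skipped.
        return limit;
    }
    // Final expression without a semicolon: the implicit return value.
    value
}

fn main() {
    println!("clamped: {}", clamp_to_limit(12, 10));
    println!("unchanged: {}", clamp_to_limit(7, 10));
}
```

Note that the early return is written as a statement ending in a semicolon, while the fall-through value is a bare expression.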

Const Functions

Functions that can be evaluated at compile time can be marked as const using the const fn syntax. This allows the function to be called from a constant context and interpreted by the compiler at compile time.

Declaring a function as const restricts the types that arguments and the return type may use, and limits the function body to constant expressions.

Several functions in the core library are marked as const. Here's an example that uses the pow function from the core library, which is implemented as a const fn:

use core::num::traits::Pow;

const BYTE_MASK: u16 = 2_u16.pow(8) - 1;

fn main() {
    let my_value = 12345;
    let first_byte = my_value & BYTE_MASK;
    println!("first_byte: {}", first_byte);
}

In this example, pow is a const function, allowing it to be used in a constant expression to define BYTE_MASK at compile time.

Note that declaring a function as const has no effect on existing uses; it only imposes restrictions for constant contexts.

Comments

All programmers strive to make their code easy to understand, but sometimes extra explanation is warranted. In these cases, programmers leave comments in their source code that the compiler will ignore but people reading the source code may find useful.

Here’s a simple comment:

// hello, world

In Cairo, the idiomatic comment style starts a comment with two slashes, and the comment continues until the end of the line. For comments that extend beyond a single line, you’ll need to include // on each line, like this:

// So we’re doing something complicated here, long enough that we need
// multiple lines of comments to do it! Whew! Hopefully, this comment will
// explain what’s going on.

Comments can also be placed at the end of lines containing code:

fn main() -> felt252 {
    1 + 4 // return the sum of 1 and 4
}

But you’ll more often see them used in this format, with the comment on a separate line above the code it’s annotating:

fn main() -> felt252 {
    // this function performs a simple addition
    1 + 4
}

Item-level Documentation

Item-level documentation comments refer to specific items such as functions, implementations, traits, etc. They are prefixed with three slashes (///). These comments provide a detailed description of the item, examples of usage, and any conditions that might cause a panic. In the case of functions, the comments may also include separate sections for parameter and return value descriptions.

/// Returns the sum of `arg1` and `arg2`.
/// `arg1` cannot be zero.
///
/// # Panics
///
/// This function will panic if `arg1` is `0`.
///
/// # Examples
///
/// ```
/// let a: felt252 = 2;
/// let b: felt252 = 3;
/// let c: felt252 = add(a, b);
/// assert!(c == a + b, "Should equal a + b");
/// ```
fn add(arg1: felt252, arg2: felt252) -> felt252 {
    assert(arg1 != 0, 'Cannot be zero');
    arg1 + arg2
}

Module Documentation

Module documentation comments provide an overview of the entire module, including its purpose and examples of use. These comments are meant to be placed above the module they're describing and are prefixed with //!. This type of documentation gives a broad understanding of what the module does and how it can be used.

//! # my_module and implementation
//!
//! This is an example description of my_module and some of its features.
//!
//! # Examples
//!
//! ```
//! mod my_other_module {
//!     use path::to::my_module;
//!
//!     fn foo() {
//!         my_module::bar();
//!     }
//! }
//! ```
mod my_module { // rest of implementation...
}

Control Flow

The ability to run some code depending on whether a condition is true and to run some code repeatedly while a condition is true are basic building blocks in most programming languages. The most common constructs that let you control the flow of execution of Cairo code are if expressions and loops.

if Expressions

An if expression allows you to branch your code depending on conditions. You provide a condition and then state, “If this condition is met, run this block of code. If the condition is not met, do not run this block of code.”

Create a new project called branches in your cairo_projects directory to explore the if expression. In the src/lib.cairo file, input the following:

fn main() {
    let number = 3;

    if number == 5 {
        println!("condition was true and number = {}", number);
    } else {
        println!("condition was false and number = {}", number);
    }
}

All if expressions start with the keyword if, followed by a condition. In this case, the condition checks whether or not the variable number has a value equal to 5. We place the block of code to execute if the condition is true immediately after the condition inside curly brackets.